EVER SINCE THE RISE OF COMMERCIAL 3D CAD in the early ’80s, engineering graphics has seen a steady pace of innovation, benefitting from Moore’s law and the evolving ecosystem of affordable graphics hardware driven largely by the gaming industry. While photorealism and fancy effects were never the focus in the world of CAD, things have evolved quite a bit from the early days of simple 2D wireframe graphics. The progress has been mostly iterative and predictable, focusing on improving performance for larger and larger models.

It is fair to say that in the last 5 to 8 years, new and disruptive technologies have emerged that have fundamentally altered the linear path of innovation that dominated engineering graphics for CAD, and particularly for architecture, over the preceding 20 to 25 years.

Virtual Reality

While some iterations of VR have been around since the mid-’90s, the hardware has finally reached a point where it is genuinely practical. Putting on a VR headset for the first time can be a jaw-dropping experience. Despite lofty initial expectations, VR has clearly not taken over the world, yet adoption has risen steadily.

VR’s benefits for entertainment and e-commerce are obvious, but its advantages are just as clear for architecture and industrial use cases. Being able to virtually walk through a building long before it is constructed, or to design a car in a virtual 3D environment where the model appears at realistic proportions in front of you with true depth perception, is a game-changer. Similarly, the ability to train your workforce remotely in a simulated environment simply by shipping a $400 device can result in massive cost savings.

Of course, challenges remain. VR headsets require the 3D scene to be rendered twice per frame, once for each eye, at consistently high framerates to avoid discomfort. This makes it difficult to use complex CAD or building models unless time-consuming preprocessing is employed to reduce triangle and entity counts, a process that is hard to scale and automate. In addition, traditional input methods are of limited use in a VR environment where users cannot see their “real” keyboard and mouse, not to mention the ergonomic challenges of wearing a large, bulky headset for extended periods.
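To put “consistently high framerates” into numbers, the short Python sketch below computes the time available to render each frame and each eye’s view. It is a simplification under stated assumptions: the refresh rates are example values (headsets vary), and it treats the two eye views as separate, sequential render passes, whereas many engines use single-pass stereo techniques to reduce that overhead.

```python
def frame_budget_ms(refresh_hz: float, views: int = 2) -> tuple[float, float]:
    """Return (milliseconds per frame, milliseconds per view).

    Assumes each eye's view is rendered as a separate, sequential pass
    that must fit inside one display refresh interval.
    """
    per_frame = 1000.0 / refresh_hz
    return per_frame, per_frame / views


# Example refresh rates (assumed for illustration; headsets vary).
for hz in (60, 90, 120):
    frame, per_eye = frame_budget_ms(hz)
    print(f"{hz:>3} Hz: {frame:5.2f} ms per frame, {per_eye:5.2f} ms per eye")
```

At 90 Hz this leaves roughly 5.6 ms per eye, compared with about 16.7 ms for an entire frame on a typical 60 Hz desktop monitor, which is why heavy CAD models often need to be simplified before they can be viewed comfortably in a headset.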

Augmented Reality

Often grouped with VR, augmented reality (AR) has its own challenges and opportunities. It is fair to say that AR has broken into the mainstream on phones, with entertainment-focused applications like Pokémon Go or Snapchat’s AR experiences and filters. Although a phone is clearly an inferior way to experience the technology compared to a dedicated headset, the mobile platform holders have invested heavily in AR: Apple and Google both offer their own AR libraries, which can use advanced hardware features such as lidar for more robust tracking.

Vuzix’s M-series smart glasses, an example of AR technology, enable remote support: technical field professionals can tap into engineering support back in the office, with integrated voice navigation, advanced waveguide optics with a full-color LDP display, and more. (Image: Vuzix)

Jim Heppelmann of PTC often describes AR as “IoT for humans” because of its ability to layer information on top of the real world, giving users virtual “superpowers” that let them look through the walls of a building and “see” the state of the devices around them. AR can help a plant supervisor quickly assess the maintenance state of machines in a factory with live IoT data streaming into their field of view, and it can aid a facility management employee doing service work in a building or a hotel worker inspecting a room. It can also be used to “preserve” and pass on knowledge from “experts” to the rest of the workforce, guide new employees through training in their first days on the job, or offer assistance in repairing broken machines in the field.

From a visualization perspective, AR in industrial settings is often useful without any real 3D graphics, simply by overlaying data such as text or graphs on top of the real world. Many more interesting use cases, such as virtual work instructions, benefit greatly from pulling in the underlying CAD data. AR also relies heavily on advances in computer vision and artificial intelligence (AI) to scan features in the environment and to reliably detect a 3D model under different lighting conditions or when it is partially obscured.
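Even a simple text overlay has to be drawn at the right spot in the camera image. The Python sketch below shows the basic step with an ideal pinhole camera model: projecting a tracked 3D point into pixel coordinates so a label can be anchored there. It assumes the AR library’s tracking has already provided the point in camera coordinates; the camera intrinsics, image size, and machine position are hypothetical values, and real AR libraries report calibrated intrinsics for the device camera.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels along x
    fy: float  # focal length in pixels along y
    cx: float  # principal point x (pixels)
    cy: float  # principal point y (pixels)


def project(point_cam: Tuple[float, float, float], k: Intrinsics) -> Optional[Tuple[float, float]]:
    """Project a 3D point given in camera coordinates (x right, y down,
    z forward) to pixel coordinates with an ideal pinhole model.
    Returns None when the point is behind the camera."""
    x, y, z = point_cam
    if z <= 0.0:
        return None
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def on_screen(pixel: Optional[Tuple[float, float]], width: int, height: int) -> bool:
    """True if the projected label anchor falls inside the camera image."""
    if pixel is None:
        return False
    u, v = pixel
    return 0.0 <= u < width and 0.0 <= v < height


# Hypothetical values: a 1280x720 camera and a machine tracked 4 m ahead.
k = Intrinsics(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
machine = (0.5, -0.2, 4.0)
anchor = project(machine, k)
print(anchor, on_screen(anchor, 1280, 720))
```

With these example numbers the label anchor lands at pixel (765, 310), inside the image. Lens distortion, which real cameras exhibit, is ignored here for brevity.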

These advances in software and hardware will continue to drive innovation in this space and enable scalable experiences that make it easier to move beyond prototypes and proofs of concept. On the hardware side, more powerful, comfortable, and affordable AR headsets promise to unlock AR’s full value and utility.

Real-Time High-End Rendering

In engineering graphics, there used to be two very distinct paths. The first was the “functional” 3D used by most engineering applications, which evolved from wireframe to simple flat shading to somewhat more advanced texturing and lighting. The second was the high-end offline rendering used largely by marketing departments.

About 5 years ago, a new technology derived from gaming and entertainment came along called PBR (Physically Based Rendering). PBR represents materials as a set of equations that model how light reflects off a surface, and these equations can be implemented very efficiently in shader code running on the GPU. This makes it much easier for CAD applications to leverage vast material libraries and convey the “real-world” characteristics of individual surfaces far more realistically.
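The exact equations differ between engines. One widely used real-time combination, also described in the glTF 2.0 metallic-roughness material model, is a Cook-Torrance specular term built from a GGX microfacet distribution, Schlick’s Fresnel approximation, and a Smith geometry term, plus a Lambertian diffuse term. The sketch below evaluates that model for a single light in plain Python rather than shader code, for readability. It is one common formulation chosen for illustration, not the model used by any particular CAD product; the roughness remapping and the constant k are common engine conventions, assumed here.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(v):
    length = math.sqrt(_dot(v, v))
    return tuple(x / length for x in v)


def shade(n, l, v, base_color, metallic, roughness):
    """Reflected light (BRDF * cosine term) per RGB channel for one light.

    n: surface normal, l: direction to the light, v: direction to the viewer.
    base_color: linear RGB in [0, 1]; metallic and roughness in [0, 1].
    """
    n, l, v = _normalize(n), _normalize(l), _normalize(v)
    n_dot_l = _dot(n, l)
    n_dot_v = _dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return (0.0, 0.0, 0.0)  # light or viewer is below the surface

    h = _normalize(tuple(a + b for a, b in zip(l, v)))  # half vector
    n_dot_h = max(_dot(n, h), 0.0)
    v_dot_h = max(_dot(v, h), 0.0)

    # GGX / Trowbridge-Reitz distribution: share of microfacets facing h.
    alpha = max(roughness * roughness, 1e-3)  # perceptual roughness -> alpha
    a2 = alpha * alpha
    d = a2 / (math.pi * (n_dot_h * n_dot_h * (a2 - 1.0) + 1.0) ** 2)

    # Smith geometry term, Schlick-GGX approximation (microfacet self-shadowing).
    k = (roughness + 1.0) ** 2 / 8.0
    g = (n_dot_l / (n_dot_l * (1.0 - k) + k)) * (n_dot_v / (n_dot_v * (1.0 - k) + k))

    result = []
    for c in base_color:
        # Dielectrics reflect about 4% at normal incidence; metals tint it.
        f0 = 0.04 * (1.0 - metallic) + c * metallic
        f = f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5  # Schlick Fresnel
        specular = d * g * f / (4.0 * n_dot_l * n_dot_v)
        diffuse = (1.0 - f) * (1.0 - metallic) * c / math.pi  # Lambert
        result.append((diffuse + specular) * n_dot_l)
    return tuple(result)


# Same red base color and lighting, once as plastic and once as metal.
light, view, normal = (0.0, 0.6, 0.8), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)
print("plastic:", shade(normal, light, view, (0.8, 0.1, 0.1), 0.0, 0.5))
print("metal:  ", shade(normal, light, view, (0.8, 0.1, 0.1), 1.0, 0.5))
```

Because a material reduces to a handful of parameters (base color, metallic, roughness), a library of materials can be shared across applications and evaluated per pixel on the GPU with the same small set of formulas.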

While PBR brought CAD closer to realism, there is still a vast chasm between real-time CAD and the glossy high-end renders traditionally created for marketing purposes, often with applications like 3D Studio Max and Maya or dedicated renderers like V-Ray.

Physically based rendering was a big step forward, but real-time raytracing is now accelerating visualization again, with both NVIDIA and AMD producing GPUs with hardware acceleration for real-time raytracing.

Two years ago, NVIDIA announced a technology called RTX that fundamentally changes this equation, allowing for real-time raytracing on consumer-level PCs and putting the visual quality of offline rendering within reach of any 3D engineering application. While it will take time for this technology to be adopted and for graphics engines to be retooled to take advantage of it, it has the potential to make photorealism a commodity, in the same way that 3D graphics has become commonplace over the last few decades.

Artificial Intelligence (AI) plays an important role in improving this technology. AI-based upscaling, such as NVIDIA’s DLSS, lets a scene be rendered at a lower resolution and then scaled up with little visible loss in quality, which improves framerates. The AI can intelligently add detail because it has been trained on vast collections of high-quality images and uses what it learned to infer the content of the 3D scene.
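The framerate gain comes largely from simple arithmetic: the renderer only has to shade, or trace rays for, the pixels of the lower internal resolution, and the upscaler reconstructs the rest. The sketch below computes that fraction for a few example resolutions (assumed for illustration). It does not model the AI upscaler itself, and it ignores the upscaler’s own cost, so the real speedup is smaller than the pixel ratio suggests.

```python
def pixels(res: tuple[int, int]) -> int:
    width, height = res
    return width * height


def shaded_fraction(render_res: tuple[int, int], output_res: tuple[int, int]) -> float:
    """Fraction of output pixels that are actually rendered (e.g. ray traced)
    before an upscaler reconstructs the full-resolution image."""
    return pixels(render_res) / pixels(output_res)


output = (3840, 2160)  # 4K output, an assumed example
for render in [(3840, 2160), (2560, 1440), (1920, 1080)]:
    frac = shaded_fraction(render, output)
    print(f"{render[0]}x{render[1]} -> 4K: {frac:.0%} of pixels rendered, "
          f"pixel workload reduced {1 / frac:.2f}x")
```

Rendering internally at 1920x1080 for a 4K display means only a quarter of the output pixels are rendered directly, which is why upscaling pairs so well with expensive techniques like raytracing.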

Cloud and Connectivity

The shift to the cloud has been under way for some time, driven by the need for better collaboration and for giving all stakeholders access to data. That trend has only accelerated during the COVID-19 pandemic, with remote working becoming the norm, at least for some companies. All of this is possible only because of steady advancements in connectivity infrastructure, culminating in technologies like 5G that vastly increase available bandwidth and reduce latency.

Many of the technologies mentioned earlier are enabled by, or benefit directly from, the rise of the cloud and connectivity. Deep learning algorithms operating in the cloud on huge amounts of data pulled from IoT devices are one example; smooth streaming of 3D content to any class of device is another. For AR and high-end visualization in particular, remote rendering on powerful cloud servers with dedicated GPUs is often used to overcome the limited power of mobile phones and AR headsets.

Tying It Together

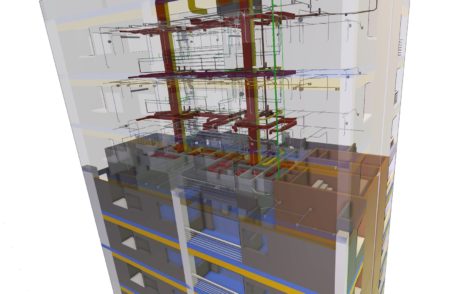

After conquering mobile and the web (a shift that is still ongoing), 3D engineering graphics has broken out of the confines of the traditional flat 2D screen with VR and AR in the last few years. It offers unparalleled levels of immersion while also becoming an extension of the real world, unlocking the power of IoT and the digital twin not only for knowledge workers but also for the people doing the actual physical work on the construction site or the shop floor. We are in the middle of this shift, which is slowly making its way past the prototype and pilot-project stages. Still, there is no doubt that everyone’s workplace will look very different in the next five to ten years, and the innovations happening in engineering graphics will be a big part of that shift.

About the Author

Gavin Bridgeman, Former CTO of Tech Soft 3D.

Gavin has nearly 30 years of experience developing software tools for the engineering software market. He has a deep interest in the areas of visualization, data translation, and 3D PDF and has played a key role in adapting Tech Soft 3D’s technology to cloud and mobile environments. Gavin has been closely involved in forging the close relationship with the Siemens PLM Components group, which recently decided to resell the HOOPS Exchange technology to the Parasolid community. Gavin holds a B.A. in Mathematics from Trinity College, Dublin, and an M.Sc. in Industrial Applied Mathematics from Dublin City University.
