NVIDIA plans to present around twenty cutting-edge AI (artificial intelligence) research papers at SIGGRAPH 2023. SIGGRAPH is one of America’s most important computer graphics conferences. This year it takes place from 6 to 10 August 2023 in Los Angeles, USA.

Not a hair out of place

The research papers that NVIDIA will present at SIGGRAPH 2023 describe the latest generative AI and neural graphics. These include NVIDIA’s collaborations with over a dozen universities in Europe, Israel, and the United States.

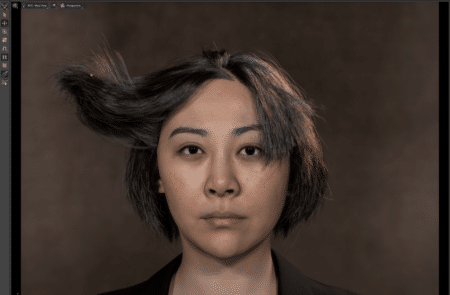

NVIDIA presentation for SIGGRAPH 2023 showing how AI can be used to make dynamic objects such as hair move realistically. (Image: NVIDIA.)

Once a 3D character is generated, artists can layer in realistic details such as hair. This is normally a complex, computationally expensive challenge for animators. Humans have an average of 100,000 hairs on their heads. Each hair reacts dynamically to an individual’s motion and the surrounding environment.

Traditionally, creators have used physics formulas to calculate hair movement, simplifying or approximating its motion based on the resources available. That is why virtual characters in a big-budget film sport much more detailed heads of hair than real-time video game avatars.

One of the NVIDIA papers showcases a method that can simulate tens of thousands of hairs in high resolution and in real time using neural physics. This is an AI technique that teaches a neural network to predict how an object would move in the real world.

- To see a video showing how neural physics enables such realistic simulations, please click here.
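The core idea of neural physics, training a model on simulator output so that it can predict the next state directly, can be illustrated with a toy example. The sketch below is not NVIDIA's method: the damped spring, the function names, and every parameter are assumptions chosen for illustration, and a single linear layer trained by gradient descent stands in for the neural network.

```python
import random

def simulate_step(x, v, dt=0.01, k=40.0, c=0.5):
    """Reference physics: one explicit-Euler step of a damped spring (unit mass)."""
    a = -k * x - c * v
    return x + dt * v, v + dt * a

def train_step_predictor(n_samples=200, epochs=300, lr=0.5, seed=0):
    """Fit a 2x2 linear layer that maps (x, v) to the next (x, v)."""
    rng = random.Random(seed)
    states = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n_samples)]
    targets = [simulate_step(x, v) for x, v in states]  # labels come from the simulator
    # W[i][j] is the weight from input j (x or v) to output i (next x or next v).
    W = [[0.0, 0.0], [0.0, 0.0]]
    for _ in range(epochs):
        grad = [[0.0, 0.0], [0.0, 0.0]]
        for inp, target in zip(states, targets):
            for i in range(2):
                err = W[i][0] * inp[0] + W[i][1] * inp[1] - target[i]
                for j in range(2):
                    # Gradient of the mean squared error.
                    grad[i][j] += 2.0 * err * inp[j] / n_samples
        for i in range(2):
            for j in range(2):
                W[i][j] -= lr * grad[i][j]
    return W

def predict_step(W, x, v):
    """Learned replacement for simulate_step."""
    return W[0][0] * x + W[0][1] * v, W[1][0] * x + W[1][1] * v

W = train_step_predictor()

# Roll both models forward from the same start and track the position error.
state_true = state_pred = (1.0, 0.0)
max_err = 0.0
for _ in range(100):
    state_true = simulate_step(*state_true)
    state_pred = predict_step(W, *state_pred)
    max_err = max(max_err, abs(state_true[0] - state_pred[0]))

print(f"largest position error over 100 steps: {max_err:.2e}")
```

This toy system is linear, so a linear layer can match the simulator almost exactly. Hair dynamics are far more complex, which is why a real neural network is needed in practice.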

Optimized for modern GPUs (graphics processing units)

The team’s novel approach to accurate simulation of full-scale hair is specifically optimized for modern GPUs. It offers significant performance improvements over state-of-the-art, CPU-based solvers, reducing simulation times from multiple days to a few hours. It also improves the quality of hair simulations possible in real time. This technique finally enables physically based hair grooming that is both accurate and interactive.

The SIGGRAPH 2023 papers cover generative AI models that turn text into personalized images. They also cover:

- Neural physics models that use AI to simulate complex 3D elements with astonishing realism.

- Neural rendering models that unlock new capabilities for generating real-time, AI-powered visual details.

- AI tools that transform still images into 3D objects.

Customized text-to-image models

Generative AI models that transform text into images are powerful tools for creating concept art or storyboards for films, video games, and 3D virtual worlds. Text-to-image AI tools can turn a prompt such as “children’s toys” into almost infinite visuals that a creator can use for inspiration.

However, some artists may have a specific subject in mind. For example, a creative director for a toy brand could be planning an ad campaign around a new teddy bear and needs to visualize the toy in different situations, such as a teddy bear’s tea party.

To enable this level of specificity in the output of a generative AI model, researchers from Tel Aviv University and NVIDIA are presenting two SIGGRAPH 2023 papers. These projects enable users to provide image examples from which the generative AI model can learn.

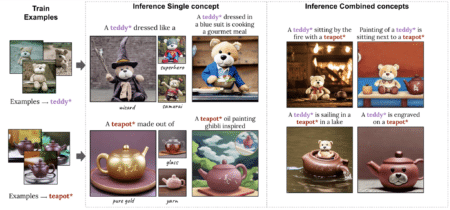

Examples of generative AI models personalizing text-to-image output based on user-provided images. (Image: NVIDIA.)

One of their papers describes a technique that needs a single example image to customize its output, accelerating the personalization process from minutes to roughly 11 seconds on a single NVIDIA A100 Tensor Core GPU. This is more than sixty times faster than previous personalization methods.

Their second SIGGRAPH 2023 paper introduces a highly compact model called Perfusion. It takes a handful of concept images and allows users to combine multiple personalized elements. For example, it can combine a specific teddy bear and a selected teapot in a single AI-generated image, as demonstrated above.

Neural rendering offers film-quality detail to real-time graphics

After an environment is filled with animated 3D objects and characters, real-time rendering simulates the physics of light reflecting through the virtual scene. Recent NVIDIA research shows how AI models for textures, materials, and volumes can deliver film-quality, photorealistic visuals in real time for video games and digital twins.

NVIDIA invented programmable shading over two decades ago. This enables developers to customize the graphics pipeline. In these latest neural rendering inventions, researchers extend programmable shading code with AI models. These run deep inside NVIDIA’s real-time graphics pipelines.

Neural texture compression

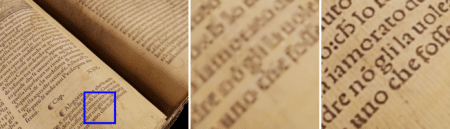

In another SIGGRAPH 2023 paper, NVIDIA will present neural texture compression, which can substantially increase the realism of 3D scenes. The image above demonstrates how neural-compressed textures (right) capture sharper detail than previous formats, where the text remains blurry (center). Neural texture compression provides up to sixteen times more texture detail than previous texture formats, and it does so without using additional GPU memory.

Three-pane image showing a page of text, a zoomed-in version with blurred text, and an equally zoomed-in version with clear text. (Image: NVIDIA.)
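To put the "sixteen times more texture detail" figure in concrete terms, the short sketch below converts a detail factor into a texture resolution. It assumes that "detail" means the number of texels stored in the same memory budget, so a factor of 16 corresponds to 4 times the resolution along each axis. The function name and the example sizes are hypothetical.

```python
import math

def same_memory_resolution(width, height, detail_factor=16):
    """Return the resolution that holds detail_factor times as many texels.

    Assumes the extra detail is spread evenly over both axes, so the
    factor must be a perfect square (16 -> 4x per axis).
    """
    scale = math.isqrt(detail_factor)
    if scale * scale != detail_factor:
        raise ValueError("detail_factor must be a perfect square")
    return width * scale, height * scale

# A 1024 x 1024 texture budget could hold a 4096 x 4096 texture instead.
print(same_memory_resolution(1024, 1024))  # (4096, 4096)
```

Under this assumption, the blurry center pane and the sharp right pane in the image would occupy the same amount of GPU memory.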

A related paper announced last year is now available in early access as NeuralVDB. This AI-enabled data compression technique reduces the memory needed to represent volumetric data by up to one hundred times. Volumetric data is used to represent effects such as clouds, fire, smoke, and water.

Sharing research

NVIDIA researchers regularly share their ideas with developers on GitHub. Their work also feeds into products, including the NVIDIA Omniverse platform for building and operating metaverse applications and NVIDIA Picasso, a recently announced foundry for custom generative AI models for visual design. Years of NVIDIA graphics research helped bring film-style rendering to games, such as the recently released Ray Tracing: Overdrive Mode for Cyberpunk 2077.

In conclusion

The cutting-edge AI research NVIDIA will present at SIGGRAPH 2023 should be of great interest to both developers and enterprises. The content of these papers will help engineers generate synthetic data to populate virtual worlds for robotics and autonomous vehicle training. They will also help creatives in art, architecture, graphic design, game development, and film.

- To learn more about SIGGRAPH 2023, please click here.

- More information regarding NVIDIA’s cutting-edge AI papers is accessible from here.
