THIS THIRD FEATURE FROM the NVIDIA GTC (GPU Technology Conference) wraps up what was a very healthy AEC track, with speakers from many of the elite global architecture firms, including Kohn Pedersen Fox (KPF), CannonDesign, Gensler, HKS, Perkins + Will and others. They shared their stories about how technology is changing the way they do business.

There was a big focus on interactivity with clients and on how firms with many offices in several countries can operate as one. I think it’s important to be aware of how these technologies work, as they change how we think about platforms, servers, and real-time collaboration. There were many conversations about rendering on the GPU and how it facilitates real-time visualization. NVIDIA’s Denoiser gives you a great-quality render in 1 or 2 passes instead of 8 or 16 by using AI to predict what the finished image should look like.

AI and deep learning in design pipelines can help us learn from how data is used, giving designers information about how rooms should be designed in an apartment building. VDI is connecting large firms that have offices all over the world; latency is improving as VMware and Citrix stay competitive with updates and as internet connections get faster. Holodeck VR by NVIDIA is changing how VR is used by creating a collaborative environment where architects, engineers, and clients can all interact in real time in a VR walk-through. GPU servers like the DGX-2 are shrinking the footprint of racked workstations by providing 2 PFLOPS of GPU compute in a 350-pound box.

I wanted to go through some of these panels because the tools these firms are using illustrate how much technology is changing and how powerful the new tools are.

The Future of Real-Time Experience Design

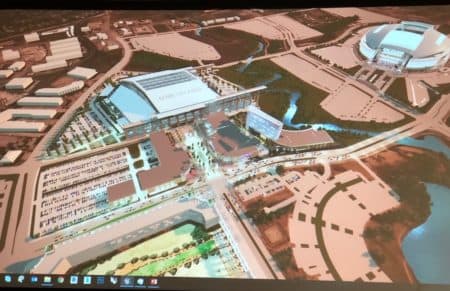

Epic Games presented a speaker panel on the Unreal Engine, with partners who use UE to build high-fidelity, interactive experiences for their customers. Owen Coffee from HKS presented the firm’s design of the new Texas Rangers stadium, “Globe Life Field,” using the Unreal Engine. (image 01) Real-time models built from Revit, Rhino and 3ds Max files were imported into the Unreal Engine and Lumion.

01 – Owen Coffee of HKS was talking about Unreal Engine in their pipeline. (image: Akiko Ashley/ Architosh. All rights reserved.)

Proprietary HKS developments enhanced the Unreal models, including a real-time crowd of 40,000 fans. Re-importing design updates from large Revit-based data sets was a complex process, which proprietary scripting in 3ds Max streamlined. On screen, Owen Coffee showed the camera moving through the model without latency, even with all the fans in their seats. The Unreal Engine was also able to handle surrounding buildings, including the stadium next door. (see image 02)

The Unreal Engine does run on the Mac, and if you connect your Mac to a virtual desktop environment (VDI), you have access to many of these tools.
